Executive Summary

Streamline travel operations with a cloud-based app that automates bookings, document checks, and vendor alerts—boosting compliance and cutting turnaround time.

The travel coordinator struggled with an overload of unread emails—many of them self-reminders—and spent hours each week managing spreadsheets and chasing manual follow-ups. After recognising these inefficiencies in her travel operations, she approached us for a smarter solution.

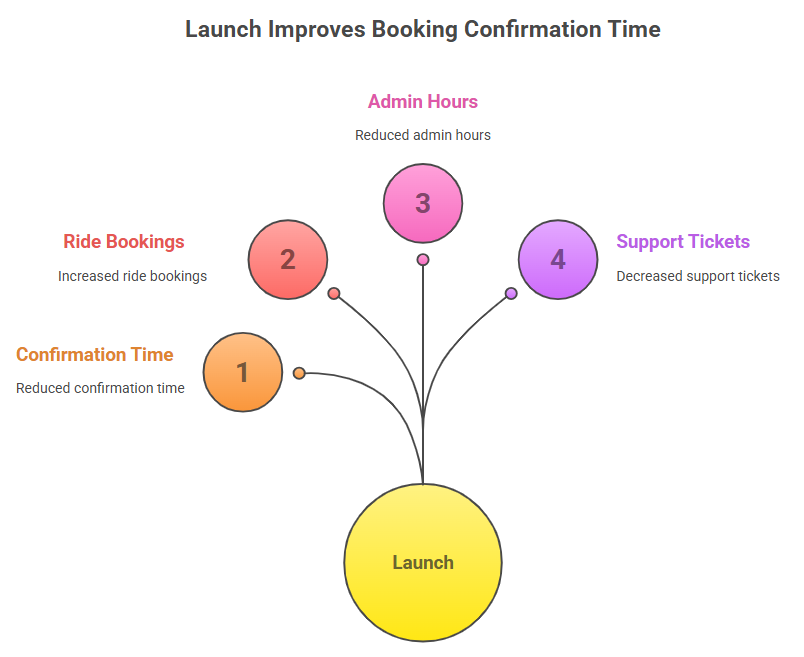

We developed a secure browser-based portal and a cross-platform mobile app, hosted on the cloud, to handle itinerary requests, document checks, and vendor confirmations with speed and reliability. Shortly after launch, the team reclaimed significant time, reduced booking turnaround, and avoided missed document-expiry alerts—freeing the coordinator to focus on strategic travel planning instead of reactive operations.

Challenges – Manual Travel Operations Before Automation

The consulting team relied on shared spreadsheets, exported calendars, and a constant stream of reminder emails. Unclear vessel codes caused double bookings, while storing passports and visas in inboxes led to last-minute cancellations when expiry dates went unnoticed. “I spent my mornings chasing emails instead of planning,” the coordinator recalled, after missing a critical booking during a weekend drill. The limitations of manual travel operations were clear.

Why Choose Our Cloud-Based Travel Automation Solution

They selected our team for our quick delivery of a working proof of concept, proven expertise integrating with collaboration tools, and our promise to handle everything—from cloud setup to web and mobile app deployment.

Project Snapshot – Travel App Development for SMEs

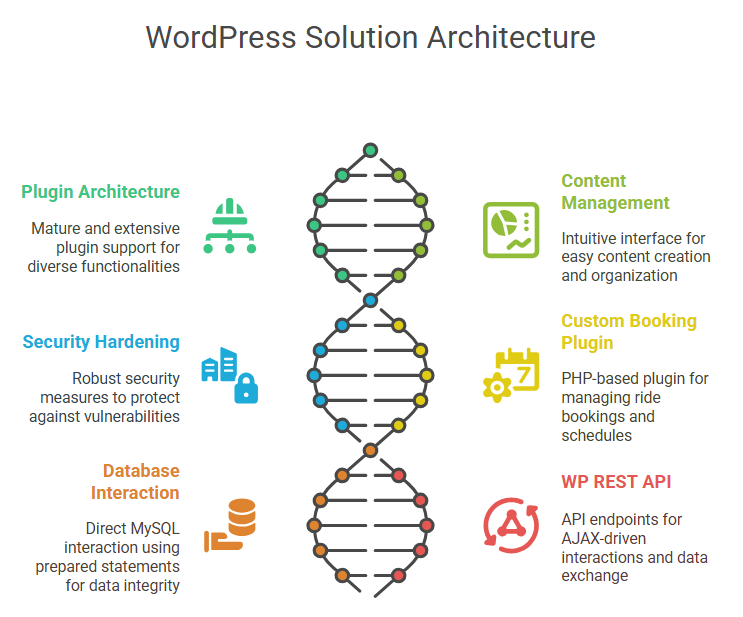

The project ran from March to August 2022. We designed it to be cost-effective for SMEs while supporting scalability and modern operational demands. Our tech stack included Flutter for mobile, Angular for the web, and FastAPI for the backend.

| Aspect | Details |
| --- | --- |
| Service | Mobile app and web application |
| Technology | Flutter, Angular, and FastAPI |
| Period | March 2022 to August 2022 |
| Budget | Designed to be cost-effective for SMEs while supporting scalability and modern operational needs |

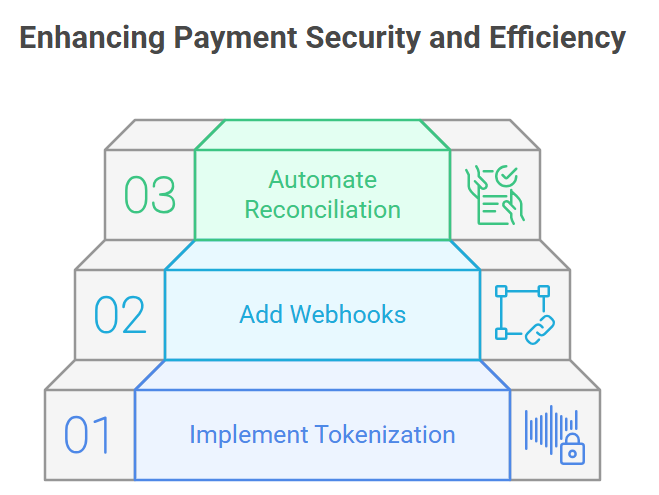

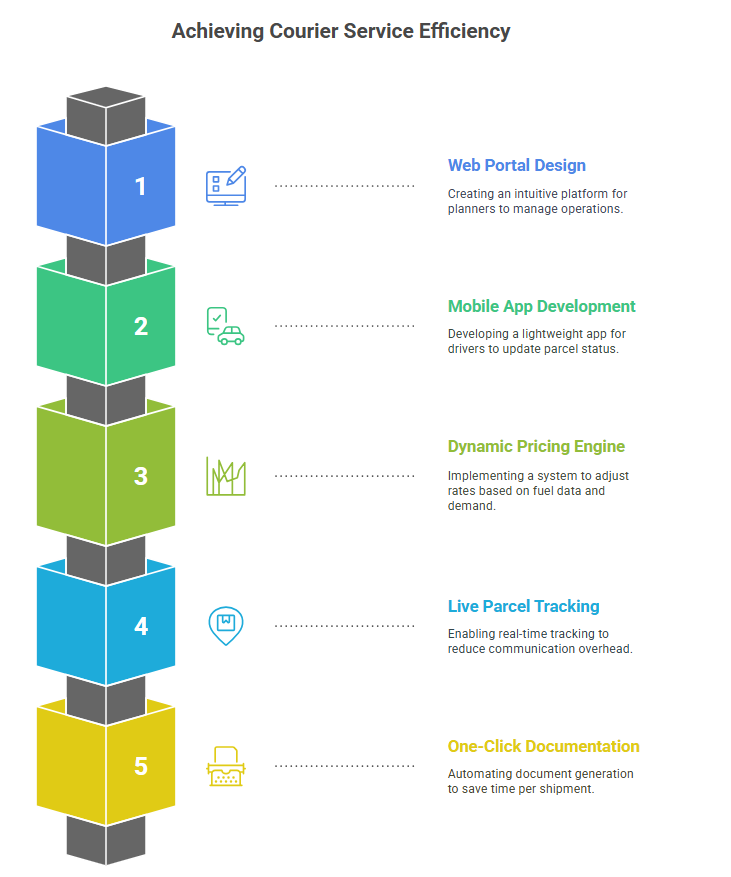

Solution – Cloud-Based Travel Management Platform

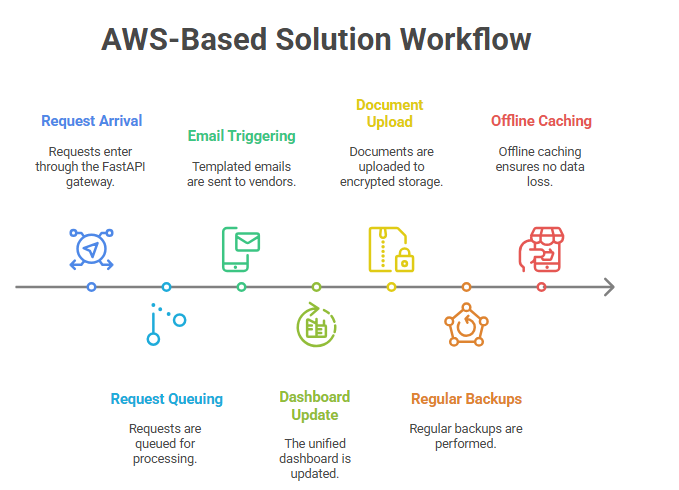

We built the solution on AWS, using AWS Lambda for compute power, Amazon API Gateway for routing, and managed services for secure storage and backups. FastAPI received requests, queued them for processing, triggered vendor emails, and updated a unified dashboard in real time. Consultants uploaded documents to encrypted storage, with regular automated backups. Offline caching on the mobile app ensured the system retained confirmations made at sea.
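The receive, queue, email, and dashboard flow described above can be illustrated with a minimal sketch. It uses only the Python standard library and is not the project's FastAPI code: the names `BookingRequest` and `Pipeline`, the in-memory queue, and the status strings are all assumptions made for illustration.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class BookingRequest:
    # Hypothetical request shape; the real payload is not described in the case study.
    consultant: str
    vendor_email: str
    trip: str


@dataclass
class Pipeline:
    pending: deque = field(default_factory=deque)   # stand-in for a managed queue
    outbox: list = field(default_factory=list)      # stand-in for outgoing vendor emails
    dashboard: dict = field(default_factory=dict)   # trip -> current status

    def receive(self, request: BookingRequest) -> None:
        """Accept a request and queue it so the caller is not kept waiting."""
        self.pending.append(request)
        self.dashboard[request.trip] = "queued"

    def process_next(self) -> bool:
        """Handle one queued request. Returns False when the queue is empty."""
        if not self.pending:
            return False
        request = self.pending.popleft()
        self.outbox.append((request.vendor_email, f"Please confirm: {request.trip}"))
        self.dashboard[request.trip] = "awaiting vendor"
        return True
```

Separating `receive` from `process_next` mirrors the idea that the API accepts a request quickly while the slower vendor email step runs afterwards; in a deployed system the queue and the mailer would be managed services rather than in-memory lists.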

Features – Automated Bookings and Vendor Alerts

Consultants submitted a single booking form. The system verified documents, contacted vendors (retrying if needed), and posted confirmed trips directly to the shared calendar. Mobile alerts prompted consultants to confirm departures and arrivals, eliminating hundreds of manual emails and spreadsheet lookups.
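As a rough illustration of the document check and the vendor retry, the sketch below shows one way these two steps could look. The function names, the rule that a document must stay valid until the trip ends, and the limit of three attempts are assumptions, not details taken from the delivered system.

```python
from datetime import date
from typing import Callable


def expired_documents(documents: dict[str, date], trip_end: date) -> list[str]:
    """Return the names of documents that expire before the trip ends."""
    return sorted(name for name, expiry in documents.items() if expiry < trip_end)


def contact_with_retry(send: Callable[[], bool], max_attempts: int = 3) -> int:
    """Call send() until it succeeds.

    Returns the number of attempts used, or 0 if every attempt failed.
    A production version would normally wait between attempts.
    """
    for attempt in range(1, max_attempts + 1):
        if send():
            return attempt
    return 0
```

A booking would only move on to the vendor step when `expired_documents` returns an empty list; a return value of 0 from `contact_with_retry` is the point at which a person would need to step in.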

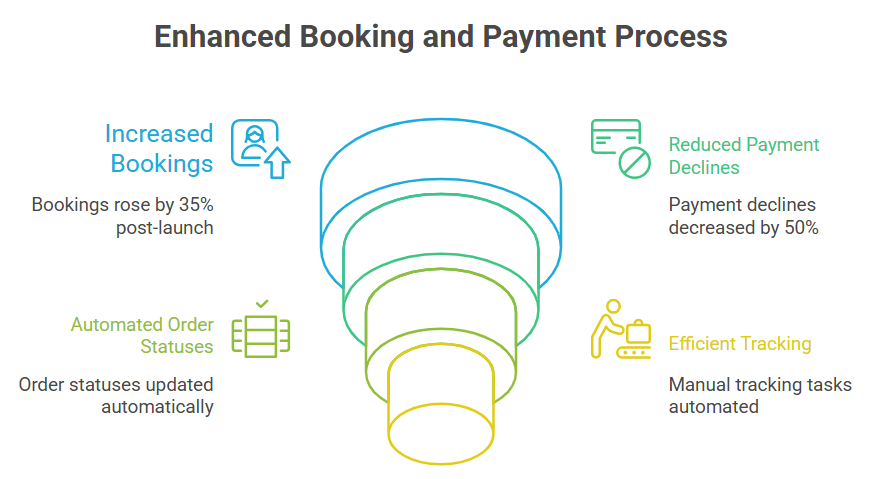

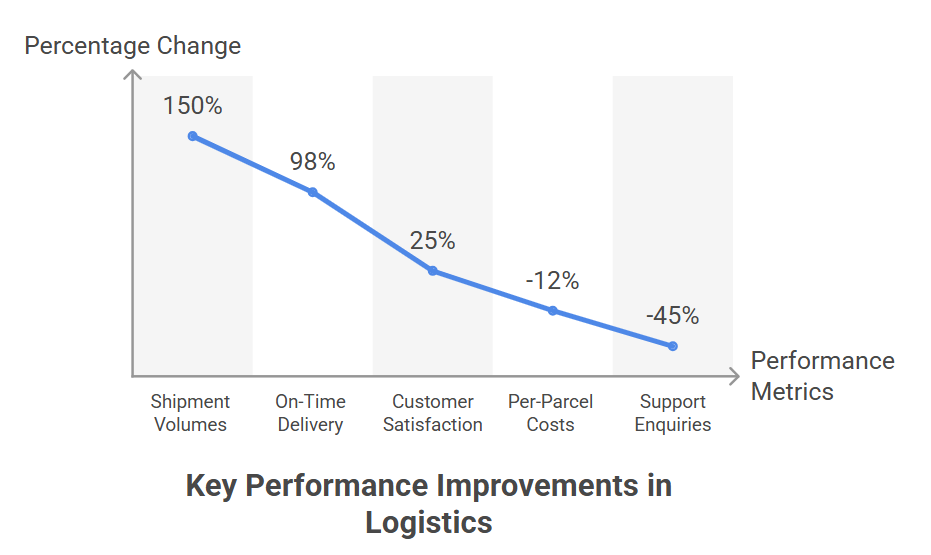

Results – Faster Travel Bookings and Higher Compliance

Time-tracking data confirmed that the team saved valuable hours each month and shifted its focus to higher-value tasks. Reminder emails dropped significantly, reducing inbox clutter. Confirmations that used to take weeks now arrive in days. Document-expiry alerts now cover nearly all necessary updates, improving compliance and reducing risk. Logs showed that the system could scale to more consultants without additional staffing, an essential gain for growing travel operations.

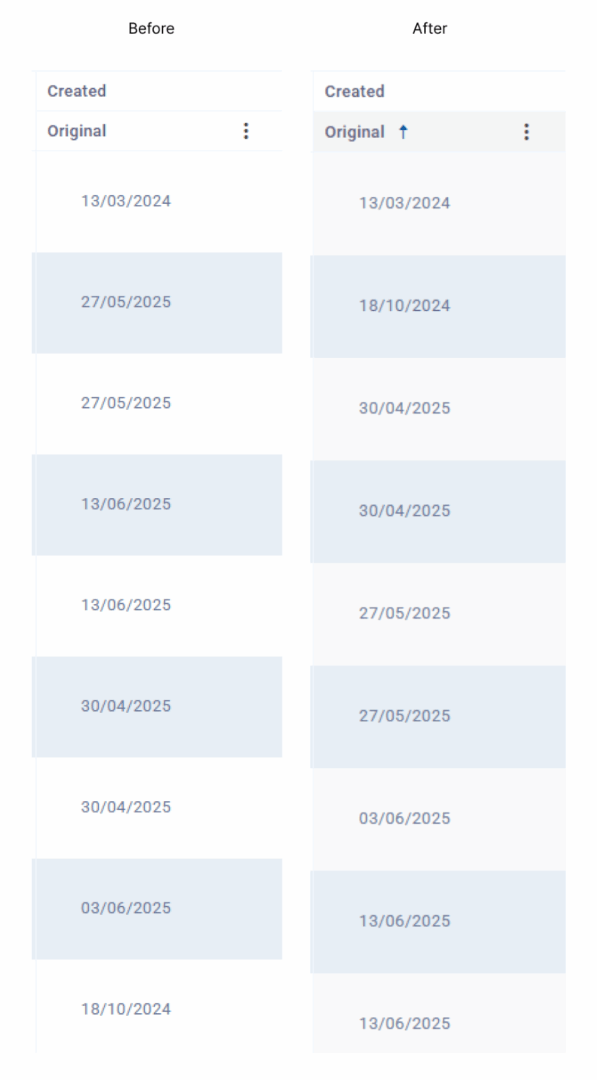

Highlights – Synchronisation and Audit Logging

During beta testing, some consultants experienced clock skew while working offline. The app flagged these issues, prompted users to confirm changes, recorded both timestamps for audit, and pushed alerts to Grafana-based dashboards.
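One way to detect clock skew at sync time is to compare the device's current clock with the server's and apply that offset to events recorded offline. The sketch below illustrates this under stated assumptions: the five-minute tolerance, the `AuditEntry` fields, and the `reconcile` function are hypothetical and not taken from the app itself.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

TOLERANCE = timedelta(minutes=5)  # assumed threshold for flagging skew


@dataclass
class AuditEntry:
    device_time: datetime       # timestamp as recorded on the device
    server_estimate: datetime   # same event, corrected by the measured skew
    needs_confirmation: bool    # True when the user should confirm the change


def reconcile(event_device_time: datetime, device_now: datetime,
              server_now: datetime, tolerance: timedelta = TOLERANCE) -> AuditEntry:
    """Keep both timestamps and flag the event when the device clock is off."""
    skew = device_now - server_now  # positive when the device clock runs ahead
    return AuditEntry(
        device_time=event_device_time,
        server_estimate=event_device_time - skew,
        needs_confirmation=abs(skew) > tolerance,
    )
```

Storing both values rather than overwriting the device timestamp keeps the audit trail intact, which matches the behaviour described above of recording both timestamps.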

Lessons Learned – User Feedback Drives Better Travel Systems

Allowing the coordinator to edit templates directly within the portal eliminated most support tickets. Early user demos revealed the need for multi-leg itineraries, which we addressed at that stage to avoid later rework.

Next Steps – Smarter, Scalable Travel Operations

We plan to add live weather updates and vessel-tracking feeds, launch a self-service vendor portal, and introduce multilingual support to prepare for international expansion.

Conclusion – Digital Transformation for Travel Operations

By replacing complex spreadsheets and excessive emails with a streamlined automated workflow, the client transformed their travel operations. They improved compliance, reduced turnaround times, and built a strong foundation for scalable growth.

Ready to streamline your operations? Get in touch with us today to see how we can cut turnaround times, boost compliance, and free your team from manual workflows.